At DeepCrawl I helped debug thousands of technical SEO issues each year on some of the largest enterprise websites in the world.

I created a Googlebot simulator in Chrome to quickly replicate and debug complex technical SEO issues. I called it the Chromebot technique.

In this guide, I’m going to explain how to make your own Googlebot simulator in Google Chrome to debug complex technical SEO issues.


What is the Chromebot technique?

The Chromebot technique is a simple non-code solution which allows a human to configure Chrome settings so that the browser acts like the Googlebot crawler (crawling only, not rendering).

It can help SEO specialists identify unique crawling and indexing issues on a website.

Why use this technique?

I’ve used this technique a lot at DeepCrawl when debugging countless client crawling and indexing issues.

It’s a fairly simple but effective non-code technique to help technical SEOs think more like a search engine crawler and less like a human.

Many websites can do funny things when Googlebot requests pages.

How do you know Googlebot crawler’s settings?

All of the settings are based on the time I spent chatting with engineers, studying the documentation around Googlebot, and updating DeepCrawl’s Page Rendering Service documentation.

I’ve listed the original documents that I’ve based the settings on:

- Fix Search-related JavaScript problems

- Understand rendering on Google Search

- JavaScript and SEO: The Difference Between Crawling and Indexing

What do you need for this technique?

All you need is Google Chrome Canary and a Virtual Private Network (VPN).

Why simulate Googlebot in Google Chrome?

There are four core benefits to using this technique which I will briefly explain.

1. Debugging in Google Chrome

I have debugged hundreds of websites in my time at DeepCrawl. Third-party web crawling tools are amazing but I’ve always found that they have limits.

When trying to interpret results from these tools I always turn to Chrome to help understand and debug complex issues.

Google Chrome is still my favourite non-SEO tool to debug issues and when configured it can even simulate Googlebot to validate what crawling tools are picking up.

2. Googlebot uses Chromium

Gary clarified that Googlebot uses its own custom-built solution for fetching and downloading content from the web, which is then passed on to the indexing systems.

There is no evidence to suggest that the Googlebot crawler uses Chromium or Chrome. However, Joshua Giardino at IPullRank makes a great argument about Google using Chromium to create a browser-based web crawler.

Google Chrome is also based on the open-source Chromium project, as are many other browsers.

It makes sense then to use a Chromium browser to simulate Googlebot web crawling to better understand your website.

3. Unique SEO insights

Using Google Chrome to quickly interpret web pages like Googlebot can help to better understand exactly why there are crawling or indexing issues in minutes.

Rather than spending time waiting for a web crawler to finish running, I can use this technique to quickly debug potential crawling and indexing issues.

I then use the crawling data to see the extent of an issue.

4. Googlebot isn’t human

The web is becoming more complex and dynamic.

It’s important to remember that when debugging crawling and indexing issues you are a human and Googlebot is a machine. Many modern sites treat these two users differently.

Google Chrome, which was designed to help humans navigate the web, can now help a human view a site like a bot.

How to set up a Googlebot simulator

Right, enough of the why. Let me explain how to create your own Googlebot simulator.

Download Google Chrome

I’d recommend downloading Chrome Canary and not using your own Google Chrome browser (or if you’ve switched to Firefox then use Google Chrome).

The main reason is that you will be changing browser settings, which can be a pain if you forget to reset them or have a million tabs open. Save yourself some time and just use Canary as your dedicated Googlebot simulator.

Download or use a VPN

If you are outside the United States then make sure you have access to a Virtual Private Network (VPN), so you can switch your IP address to the US.

This is because by default Googlebot crawls from the US, and to truly simulate crawl behaviour you have to pretend to be accessing a site from the US.

Chrome Settings

Once you have these downloaded and set up it’s time to configure Chrome settings.

I have provided an explanation of why you need to configure each setting but the original idea of using Chromebot came to me when I rewrote the Page Rendering Service guide.

Web Dev Tools

The Web Developer Tools UI is an important part of viewing your website like Googlebot. To make sure you can navigate around the console you will need to move the Web Dev Tools into a separate window.

Remember that your DevTools window is linked to the tab you opened it in. If you close that tab in Google Chrome the settings and DevTools window will also close.

This is very simple to do. All you need to do is:

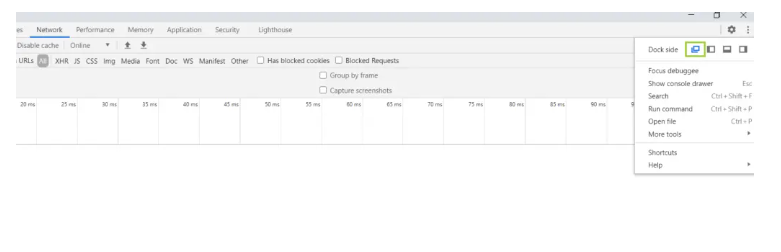

- Right-click on a web page and click inspect element (or CTRL+SHIFT+I)

- Navigate to the right side, click on the 3 vertical dots, and select the far-left “Dock side” option (undock into a separate window).

The Web Dev Tool console is now in a separate window.

User-agent token

A user-agent string – or line of text – is a way for applications to identify themselves to servers or networks. To simulate Googlebot we need to update the browser’s user-agent to let a website know we are Google’s web crawler.

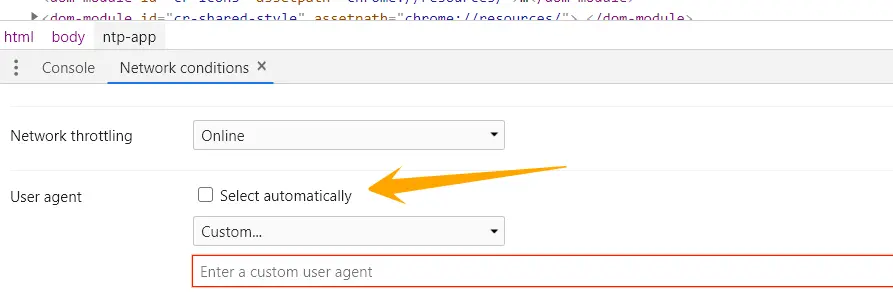

Command Menu

Use the Command Menu (CTRL + Shift + P) and type “Show network conditions” to open the network condition tab in DevTools and update the user-agent.

Manual

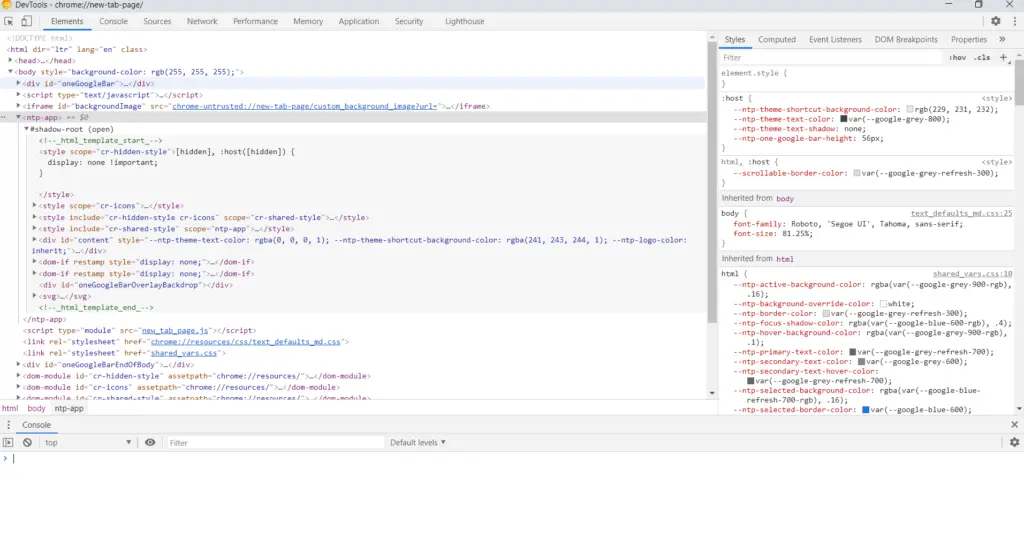

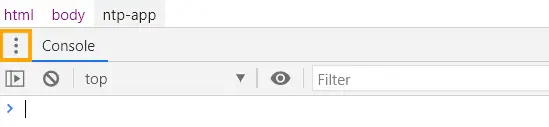

To do this, navigate to the separate Web Dev Tools window and press the Esc button. This will open up the console.

Click on the three vertical dots on the left of the console tab.

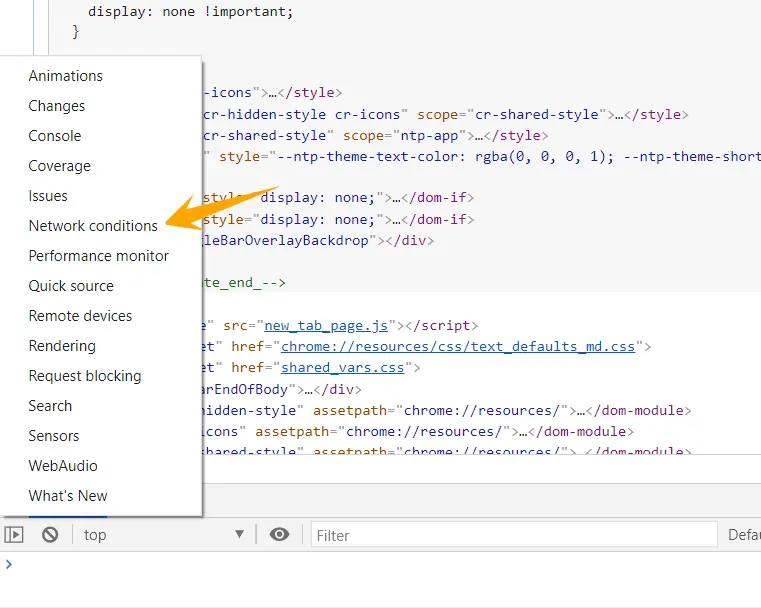

In the list of options, click on the network conditions. This will open the network conditions tab next to the console tab.

In the network conditions tab scroll down and untick the ‘user-agent select automatically’ option.

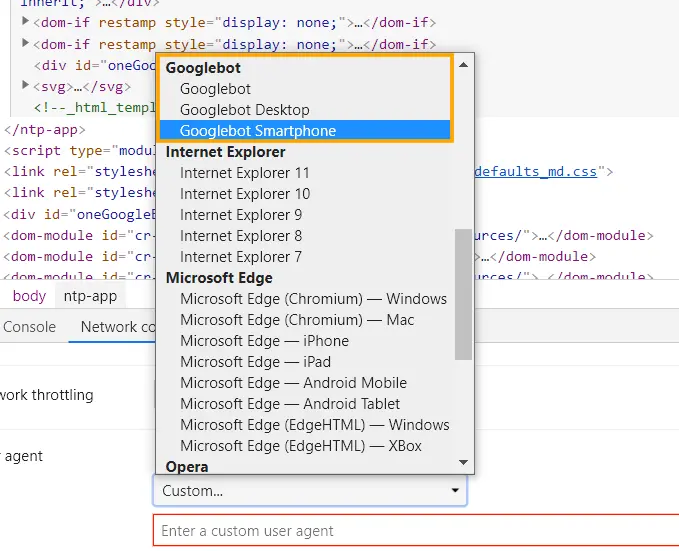

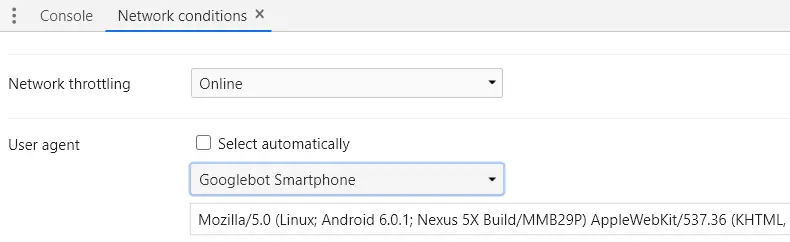

Google Chrome will now allow you to change the user-agent string of your browser to Googlebot or Googlebot Mobile.

I usually set it to Googlebot Mobile, as mobile-first indexing is now the default. That said, I’d recommend checking in Google Search Console to see which Googlebot crawls your website most often.

The Googlebot user-agent will use the dev beta Chrome version, not the stable version, automatically. This isn’t usually an issue for 99% of websites but if you need to you can input the custom UA from stable Chrome.

Now you’ve changed the user-agent, close the console (press ESC again).
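If you ever want to repeat this step outside the browser, the same idea can be sketched in a few lines of Python. This is only an illustration and not part of the Chromebot technique itself: the user-agent string follows the Googlebot Smartphone format published in Google’s crawler documentation, where `W.X.Y.Z` stands in for the Chrome version. The `CHROME_VERSION` value and the `googlebot_request` helper are hypothetical names I’ve made up for the example.

```python
import urllib.request

# Googlebot Smartphone user-agent format from Google's crawler documentation.
# Google writes the Chrome version as "W.X.Y.Z"; here it is a template field.
GOOGLEBOT_MOBILE_UA = (
    "Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) "
    "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/{version} "
    "Mobile Safari/537.36 "
    "(compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
)

# Assumption: a placeholder version number, replace it with a current one.
CHROME_VERSION = "120.0.0.0"


def googlebot_request(url, version=CHROME_VERSION):
    """Build (but do not send) a request that identifies itself as Googlebot."""
    user_agent = GOOGLEBOT_MOBILE_UA.format(version=version)
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


request = googlebot_request("https://example.com/")
print(request.get_header("User-agent"))
```

Passing `request` to `urllib.request.urlopen` would send it. Bear in mind that a site which verifies Googlebot by reverse DNS lookup will not be fooled by the header alone, in a script or in Chrome.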

Enable stateless crawling

Googlebot crawls web pages statelessly across page loads.

The Google Search developer documentation states that this means that each new page crawled uses a fresh browser and does not use the cache, cookies, or location to discover and crawl web pages.

Our Googlebot simulator also needs to replicate being stateless (as much as it can) across each new page loaded. To do this you’ll need to disable the cache, cookies, and location in your Chrome.
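To make “stateless” a little more concrete, here is a rough Python sketch of the same idea. It is an assumption-laden illustration, not how Googlebot is built: `stateless_opener` and `stateless_request` are hypothetical helper names, and the `no-cache` headers simply mirror what the DevTools ‘Disable cache’ option does rather than anything Googlebot is documented to send.

```python
import urllib.request


def stateless_opener():
    """Return a brand new opener with no cookie handling.

    urllib only stores and resends cookies when an HTTPCookieProcessor is
    installed, so a default opener carries nothing over between requests.
    """
    return urllib.request.build_opener()


def stateless_request(url):
    """Build a request that asks caches along the way for a fresh copy."""
    headers = {
        # Assumption: mirrors DevTools "Disable cache", not Googlebot itself.
        "Cache-Control": "no-cache",
        "Pragma": "no-cache",
    }
    return urllib.request.Request(url, headers=headers)


# One fresh opener per page, so nothing learned on page one reaches page two.
for page in ("https://example.com/", "https://example.com/about"):
    opener = stateless_opener()
    request = stateless_request(page)
    print(page, request.get_header("Cache-control"), len(opener.handlers))
```

The loop only builds the openers and requests. Calling `opener.open(request)` would perform the actual fetch.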

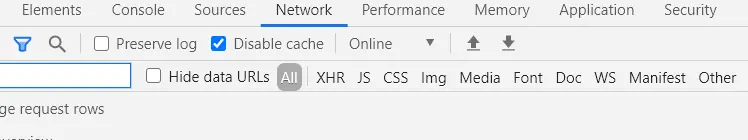

Disable the cache

Command Menu

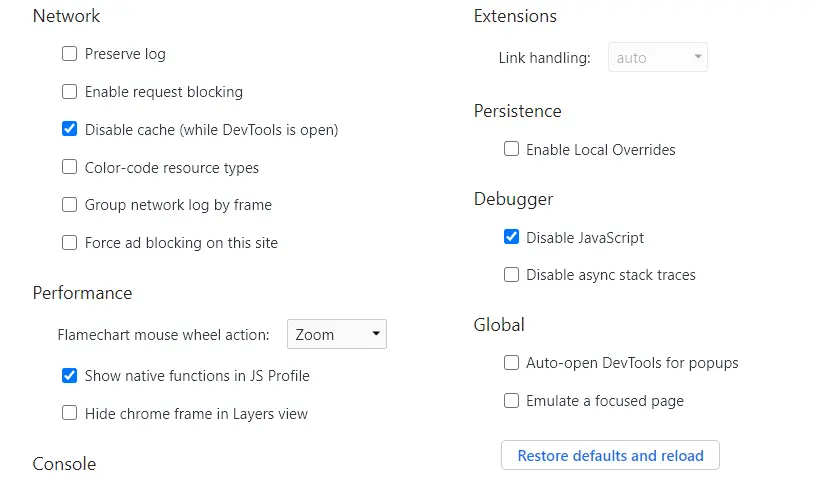

Use the Command Menu (CTRL + Shift + P) and type “Disable Cache” to disable the cache when DevTools is open.

Manual

To disable the cache go to the Network panel in DevTools and check the ‘Disable cache’ box.

Disable cookies

In Chrome navigate to chrome://settings/cookies. In the cookies settings choose the option to “Block third-party cookies”.

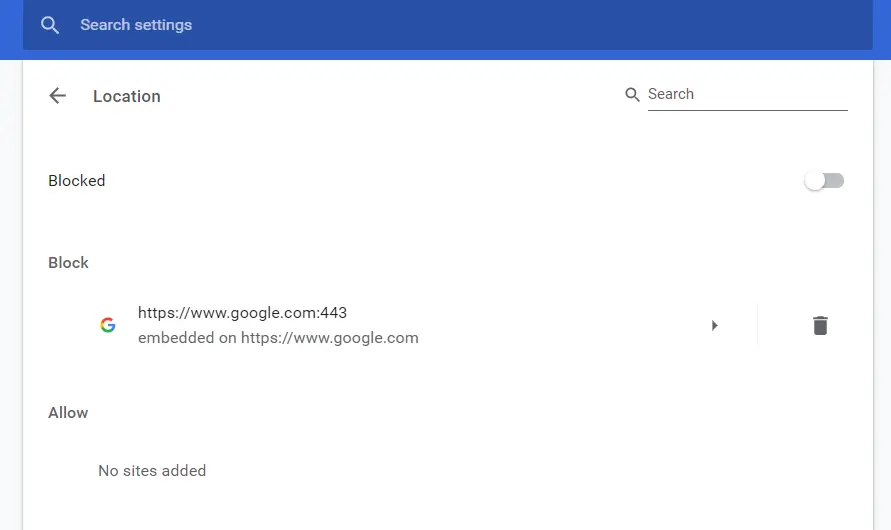

Disabling location

In Chrome navigate to the chrome://settings/content/location in your browser. Toggle the “Ask before accessing (recommended)” to “Blocked”.

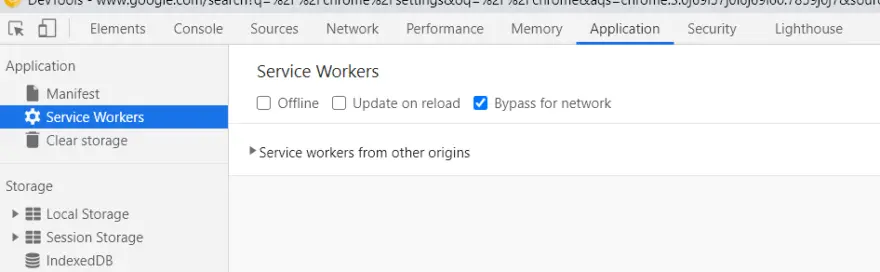

Disable Service Workers

Googlebot disables interfaces relying on the Service Worker specification. This means it bypasses any Service Worker, which might otherwise serve cached data, and fetches URLs directly from the server.

To do this navigate to the Application panel in DevTools, go to Service Workers, and check the ‘Bypass for network’ option.

Once this is enabled the browser will be forced to always request a resource from the network and not use a Service Worker.

Disable JavaScript

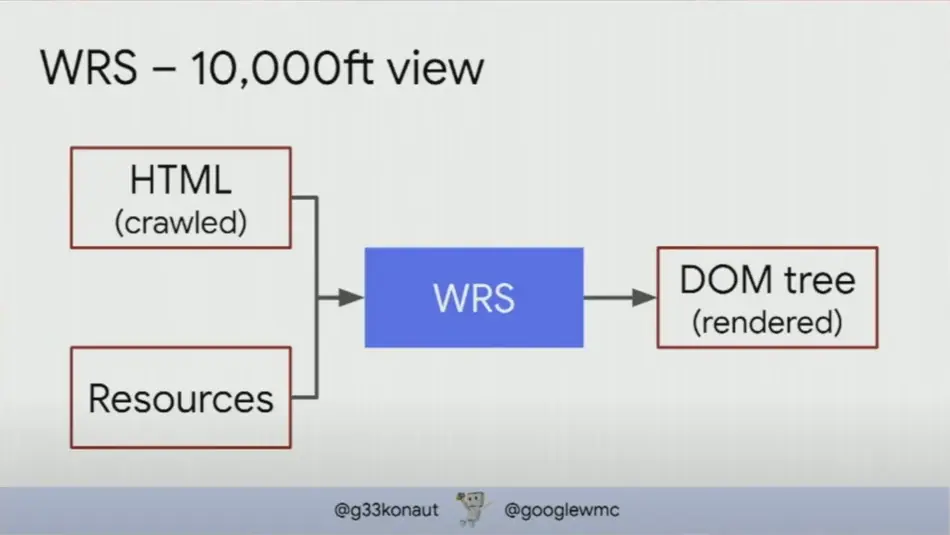

The Googlebot crawler does not execute any JavaScript when crawling.

The crawling and rendering sub-systems are further explained in the Understand the JavaScript SEO basics guide and Googlebot & JavaScript: A Closer Look at the WRS at TechSEO Boost 2019.

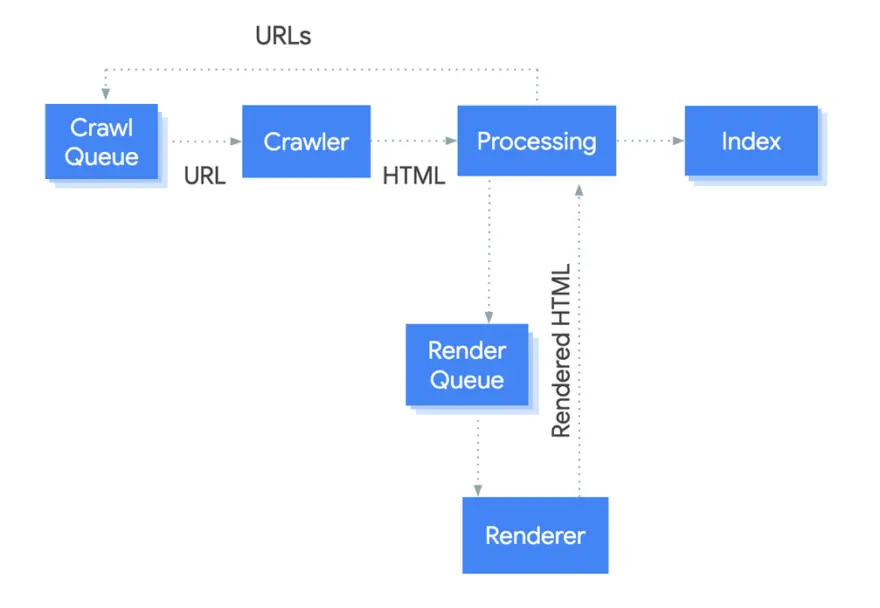

Googlebot is a very complex system and even the diagram above is an oversimplification. However, the Googlebot crawler must first fetch, download, and inspect a web page regardless of rendering.

For more information on how to diagnose rendering issues check out my How to Debug JavaScript SEO issues in Chrome.

It’s important to make sure we can inspect server-side HTML, HTTP status codes, and resources without JavaScript in our Googlebot simulator.

Command Menu

Use the Command Menu (CTRL + Shift + P) and type “Disable JavaScript” to quickly disable JavaScript.

Manual

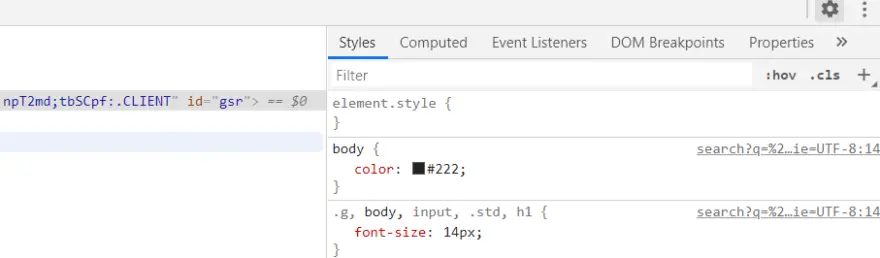

To disable JavaScript in Chrome, navigate to DevTools and click on the settings cog.

Then check the ‘Disable JavaScript’ box.

Now when you use your Googlebot simulator you’ll only be inspecting the initial server-side HTML. This will help you to better understand if there are any link, content, or HTTP status code issues causing the crawler problems.
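As a rough illustration of why this matters, the Python sketch below pulls links out of unrendered HTML, which is all a non-rendering crawler has to work with. It is a simplified stand-in, not Googlebot’s actual parser, and `LinkExtractor`, `links_in_raw_html`, and `SAMPLE_HTML` are names invented for the example.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collect href values from <a> tags found in unrendered HTML."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        # Only anchors present in the HTML source are seen; no script is run.
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def links_in_raw_html(html):
    """Return every <a href> value found in the HTML as delivered."""
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.links


# Hypothetical page: one link in the HTML, one added later by JavaScript.
SAMPLE_HTML = """
<html><body>
  <a href="/about">About</a>
  <div id="app"></div>
  <script>
    var link = document.createElement("a");
    link.href = "/products";
    document.getElementById("app").appendChild(link);
  </script>
</body></html>
"""

print(links_in_raw_html(SAMPLE_HTML))
```

Only `/about` is found. The `/products` link exists only after the script runs, so it is invisible at the crawling stage, which is exactly what you see in Chrome with JavaScript disabled.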

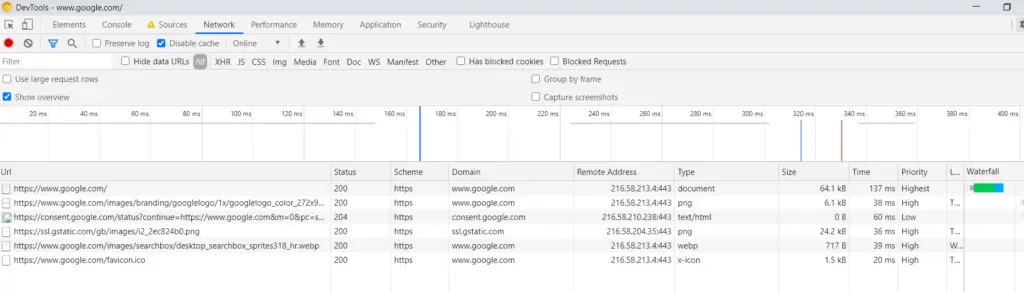

Network Panel

Finally, it is time to configure the Network panel. It is in this area in DevTools where you will be spending a lot of time as Googlebot.

The Network panel is used to make sure resources are being fetched and downloaded. It is in this panel that you can inspect the metadata, HTTP headers, content, etc. of each individual URL downloaded when requesting a page.

However, before we can inspect the resources (HTML, CSS, IMG) downloaded from the server like Googlebot, we need to update the column headers to display the most important information in the panel.

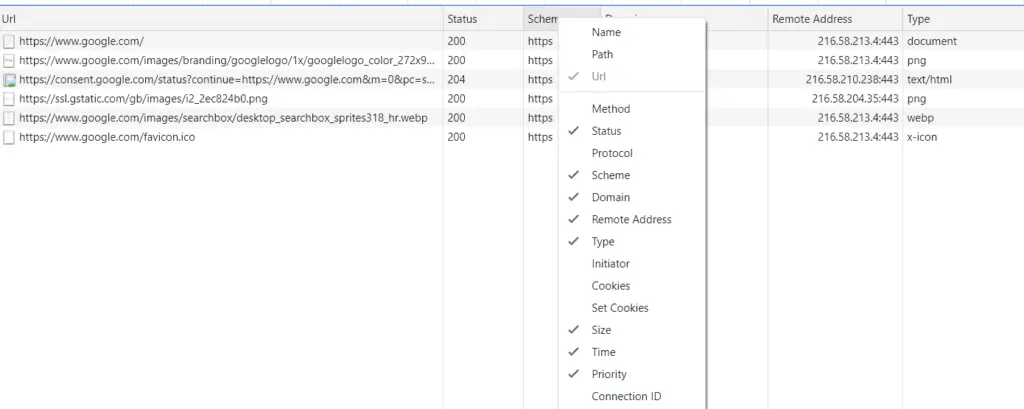

Go to the Network panel in DevTools (now a separate window). In the table in the panel, right-click on the column headers and select the headings listed below to be added as columns in the Network panel (remove any others not listed).

I have also provided a brief explanation of each heading and why they should be added.

Status

The HTTP status code of the URL being downloaded from the server. Googlebot will alter its crawling behaviour depending on the type of HTTP status code, so this is one of the most critical pieces of information to understand when auditing URLs.

Scheme

Displays the unsecure http:// or secure https:// scheme of the resource being downloaded. Googlebot prefers to crawl and index HTTPS URLs so it’s important to get a good understanding of the scheme being used by resources on a page.

Domain

Displays the domain where the resources were downloaded. It’s important to understand if important content relies on an external CDN, API, or subdomain as Googlebot might have trouble fetching the content.

Remote address

Google Chrome lists the IP address of the host where the resources are being downloaded. As the crawl budget of a website is based on the IP address of the host and not on the domain, it is important to also take into account the IP address of each URL fetched.

Type

The MIME type of the requested resource. It’s important to make sure important URLs are labeled with the correct MIME type as different types of Googlebot are interested in different types of content (HTML, CSS, IMG).

Size

The combined size of the response headers plus the response body, as delivered by the server. It’s important to improve the site speed of a website, as this can help both your users and Googlebot access your site quicker.

Time

The total duration, from the start of the request to the receipt of the final byte in the response. The response of your server can affect the crawl rate limit of Googlebot. If the server slows down then the web crawler will crawl your website less.

Priority

The browser’s best guess of which resources to load first. This is not how Googlebot prioritises URLs to crawl but it can be useful to see which resources are prioritised by the browser (using its own heuristics).

Last Modified

The Last-Modified response HTTP header contains the date and time at which the origin server believes the resource was last modified. This response can be used by Googlebot, in combination with other signals, to help prioritize crawling on a site.
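If it helps to see how some of these columns relate to a raw response, here is a small Python sketch that maps one response onto a handful of them. It is an approximation under stated assumptions: `network_row` is a hypothetical helper, the headers are assumed to arrive as a plain dict with lower-case keys from whatever HTTP client you use, and the size counts the body only (DevTools also counts the response headers). Remote address, Time, and Priority are left out because the browser measures those itself.

```python
from email.utils import parsedate_to_datetime
from urllib.parse import urlsplit


def network_row(url, status, headers, body):
    """Map one response onto a few of the Network panel columns.

    Assumptions: `headers` is a dict with lower-case keys and `body` is the
    raw response bytes.
    """
    parts = urlsplit(url)
    last_modified = headers.get("last-modified")
    return {
        "status": status,
        "scheme": parts.scheme,
        "domain": parts.hostname,
        # Drop parameters such as "; charset=utf-8" to leave the MIME type.
        "type": headers.get("content-type", "").split(";")[0].strip(),
        # Body bytes only; DevTools adds the response header size as well.
        "size": len(body),
        "last_modified": (
            parsedate_to_datetime(last_modified) if last_modified else None
        ),
    }


row = network_row(
    "https://www.example.com/page?x=1",
    200,
    {
        "content-type": "text/html; charset=utf-8",
        "last-modified": "Wed, 21 Oct 2015 07:28:00 GMT",
    },
    b"<html></html>",
)
print(row)
```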

US IP Address

Once you have updated the Network panel headers in Chrome DevTools your Googlebot simulator is almost ready.

If you want to use it straight away you need to switch to a US IP address.

Googlebot crawls from the United States of America. For this reason, I’d always recommend changing your IP address to the US when using your Googlebot simulator.

It’s the best way to understand how your website behaves when visited by Googlebot. For example, if a site is blocking visitors with US IP addresses or geo-redirects visitors based on their location, this might cause issues with Google crawling and indexing a website.
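A geo-redirect shows up in the Network panel as a 3xx status with a Location header. As a hedged illustration of what to look for, the Python sketch below checks whether a response redirects and whether it sends the visitor to a different hostname. The helper names `redirect_target` and `leaves_host` are hypothetical, and the example URLs are made up.

```python
from urllib.parse import urljoin, urlsplit

# HTTP status codes that redirect the client to the Location header.
REDIRECT_STATUSES = {301, 302, 303, 307, 308}


def redirect_target(request_url, status, location):
    """Return the absolute redirect URL, or None if this is not a redirect."""
    if status not in REDIRECT_STATUSES or not location:
        return None
    # Location may be relative, so resolve it against the requested URL.
    return urljoin(request_url, location)


def leaves_host(request_url, status, location):
    """Return True when a redirect points at a different hostname."""
    target = redirect_target(request_url, status, location)
    if target is None:
        return False
    return urlsplit(target).hostname != urlsplit(request_url).hostname


# A redirect to a country-specific domain changes the hostname.
print(leaves_host("https://example.com/", 302, "https://example.co.uk/"))
# A redirect to a country folder stays on the same hostname.
print(redirect_target("https://example.com/", 301, "/us/"))
```

Comparing the result with and without the US VPN switched on is one way to spot location-based behaviour. Note that a redirect into a folder such as `/us/` keeps the hostname, so check `redirect_target` as well as `leaves_host`.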

I, Googlebot Chrome

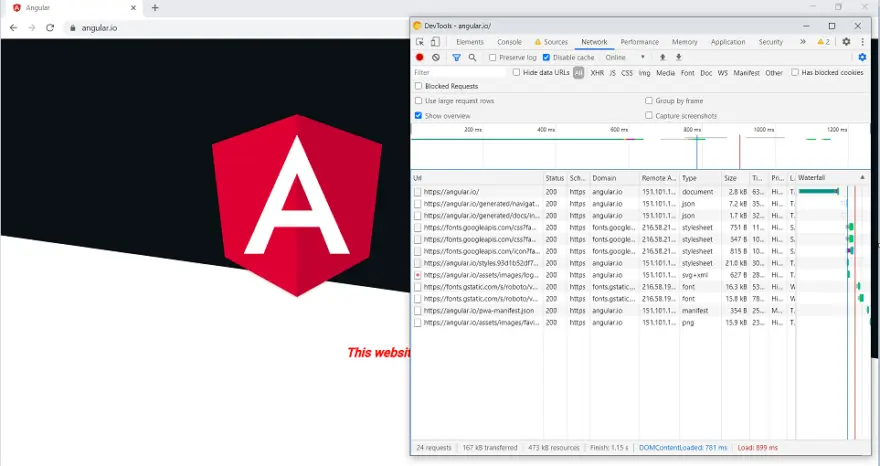

Once your IP address is switched you are ready to go and have your own Googlebot simulator.

If you want to test to see if it works, go to angular.io or eventbrite.com. These websites require JavaScript to load content and links – with JavaScript disabled these sites won’t load content properly in the interface.

Frequently Asked Questions

Does the simulator work for just one tab?

Yes. Chrome DevTools settings only apply to the tab you currently have open. Opening a new tab will cause the Disable JavaScript and User-agent settings to be reset.

Other Chrome based settings (cookies, service workers) will still be configured.

Does this help to debug JavaScript SEO issues?

Yes, this technique can be used to debug JavaScript SEO issues on a website when comparing view-source to rendered HTML, although there might be better extensions and tools to do this at scale.

Do I need to update the settings every time?

Once your tab is closed you’ll need to update the following settings:

- Disable JavaScript

- Update User-agent token

All other settings will have been saved by the browser.

Why do I need to use Chrome Canary?

I only suggest using this to stop you from messing up your Chrome browser and having to spend time going back and forth between settings.

If you use Firefox or Safari then just download the normal Google Chrome.

I’ve already built this in headless chrome or through some other automation?

First off, well done! If you’re like me and don’t (currently) have the time/capacity to learn new coding languages then this non-code method is great to get started.